Docker is a popular open source tool that provides a portable and consistent runtime environment for software applications. Docker runs applications in containers: isolated user-space environments that run at the operating system level and share the host's kernel and system resources. Containerisation therefore consumes significantly fewer resources than a traditional server or virtual machine. This article explains the most important practical points and answers the most common questions about Docker. You can find a dedicated video on the topic “Deploy OPC Router in the Docker Container” in our tutorial stream.

1. What is Docker?

Docker is an open source technology for developing, distributing and running applications.

Docker allows you to separate applications from the underlying infrastructure. This makes software deployment faster, easier and more secure.

1.1. What are the core properties?

The core property of Docker is that applications are encapsulated in so-called Docker containers. They can therefore be used on any system running a Linux, macOS or Windows operating system. While other container systems have existed for some time, Docker became popular because it provides a more accessible and comprehensive interface for this technology. It also created a public software repository of base container images that users can build on when creating containerised environments to run their applications.

1.2. What is the main advantage of Docker?

With Docker, you can ensure that your applications behave the same way in any environment. This is possible because each application is bundled together with its dependencies in a Docker container.

This also means that DevOps teams (development and IT operations), for example, can build a wide variety of applications with Docker without them interfering with each other. A container can be created with the required applications installed and then handed over to quality assurance. QA then only has to run the container to test all processes and functions independently of the host system. Using Docker therefore saves time and, unlike with virtual machines (VMs), you don’t have to worry about which platform you are using:

Docker containers work everywhere.

2. What exactly is a Docker container?

A Docker container is a standard unit of software that packages code together with all its dependencies. This makes the application run quickly and reliably in a wide variety of computing environments. A Docker container is a lightweight, standalone, executable software package that contains everything needed to run an application:

- Program code

- Runtime engines

- System tools

- System libraries

- Settings

This containerised software always runs the same way on Linux, Mac and Windows-based systems, regardless of the infrastructure. Docker containers isolate the software from its environment and ensure that it works consistently despite differences between environments.

2.1. What are the advantages of containers?

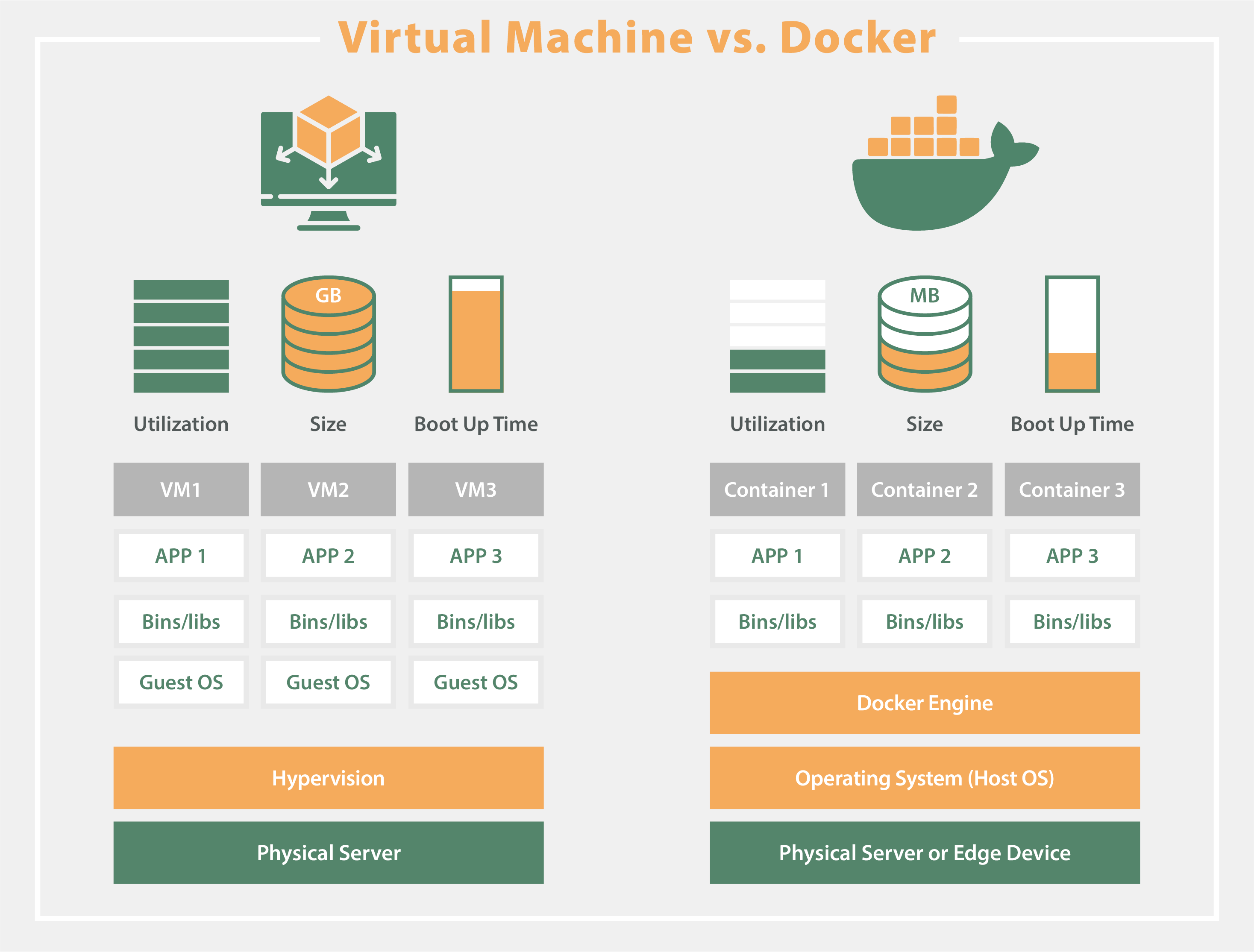

Docker containers are particularly popular because they offer many advantages over virtual machines (VMs). A VM contains a complete copy of an operating system, the application itself and all the necessary binaries and libraries. This usually takes up tens of gigabytes of storage capacity. VMs, unlike Docker containers, can also be slow to boot. Docker containers, on the other hand, require less storage space because their images are often only tens of megabytes in size. With Docker, more applications can therefore run on the same hardware, and fewer VMs and operating systems are needed. It is also easy to use containers on edge devices, e.g. on compact single-board computers such as the Raspberry Pi or on robust, low-maintenance embedded PCs in industry.

Docker containers are therefore more flexible and efficient, and the use of Docker in the cloud is very popular. The ability to run different applications on a single operating system instance greatly widens the deployment options. A key advantage of Docker containers is the ability to isolate apps not only from each other, but also from the underlying system. This makes it easy for the user to control how a containerised unit uses the system and its available resources (CPU, GPU and network). It also ensures that data and code remain separate from each other.

3. What components are there?

The Docker platform consists of a number of components, of which Docker Desktop and Docker Engine are two important parts. Docker images define the content of containers. Docker containers are executable instances of images. The Docker daemon is a background application that manages and runs Docker images and containers. The Docker client is a command line interface (CLI) that calls the API of the Docker daemon. Docker registries contain images, and Docker Hub is a widely used public registry. Much of Docker (but not Docker Desktop) is open source under the Apache License 2.0.

3.1 Docker Desktop

Most Docker components are available for Windows, Mac and Linux, and most Docker containers run on Linux. Docker Desktop was originally offered only for Windows and Mac; a Linux version has since been released as well. Docker Desktop is a GUI (Graphical User Interface) tool that essentially wraps a virtual machine installation. This virtual machine is not necessary on Linux, as the Docker Engine can be run directly there. Docker Desktop is used to manage various Docker components and functions, including containers, images, volumes (storage attached to containers), local Kubernetes (a system that automates the deployment, scaling and management of container applications), development environments within containers and more.

3.2 Docker Images / Docker Registry

A Docker image is an immutable file that is essentially a snapshot of a container. Images are created with the “build” command and produce a container when started with “run”. Images are stored in a Docker registry such as “registry.hub.docker.com”. They are built from several layers, which can be shared with other images, so that only a small amount of data needs to be transferred when images are sent over the network.
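The effect of layering on transfer size can be illustrated with a small sketch. It is a simplified model, not the registry protocol itself: the layer names are hypothetical placeholders (real registries identify layers by content digests), and `layers_to_transfer` is a helper invented for this illustration.

```python
def layers_to_transfer(image_layers, local_cache):
    """Return the layers of an image that are not yet present locally, in order."""
    return [layer for layer in image_layers if layer not in local_cache]


# Hypothetical layer identifiers; real registries use content digests.
base_image = ["os-base", "runtime"]
app_v1 = base_image + ["app-code-v1"]
app_v2 = base_image + ["app-code-v2"]

cache = set()

# First pull: nothing is cached, so every layer is transferred.
first_pull = layers_to_transfer(app_v1, cache)
cache.update(first_pull)

# Second pull of a newer version: the shared base layers are already cached.
second_pull = layers_to_transfer(app_v2, cache)

print(first_pull)
print(second_pull)
```

In this model, updating the application only transfers the layer that actually changed, because the base layers are shared between both versions.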

3.3 Docker Container

If one were to speak in the language of programmers, an image would be a class and a container would be an instance of a class – a runtime object. Containers are the reason why Docker is used in so many ways: They are lightweight and portable encapsulations of an environment in which applications can be run (see also “What exactly is a Docker container?“).
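The class/instance analogy can be written down as a short sketch. The `Image` and `Container` classes below are purely illustrative and are not part of any Docker library; the image name and layer names are made up.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Immutable template, comparable to a Docker image."""
    name: str
    layers: tuple


class Container:
    """Runtime object created from an image, with its own private state."""
    _ids = itertools.count(1)

    def __init__(self, image):
        self.id = next(Container._ids)
        self.image = image
        self.running = False
        self.files = {}  # writable state that belongs to this container only

    def start(self):
        self.running = True

    def stop(self):
        self.running = False


# Hypothetical image name and layers, for illustration only.
image = Image("example-app:1.0", ("os-base", "runtime", "app-code"))

first = Container(image)
second = Container(image)

first.start()
first.files["/tmp/note.txt"] = "only in the first container"

print(first.running, second.running)
print(second.files)
```

Both containers share the same unchangeable image, while the running state and any files written at runtime belong to the individual container.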

3.4 Docker Engine

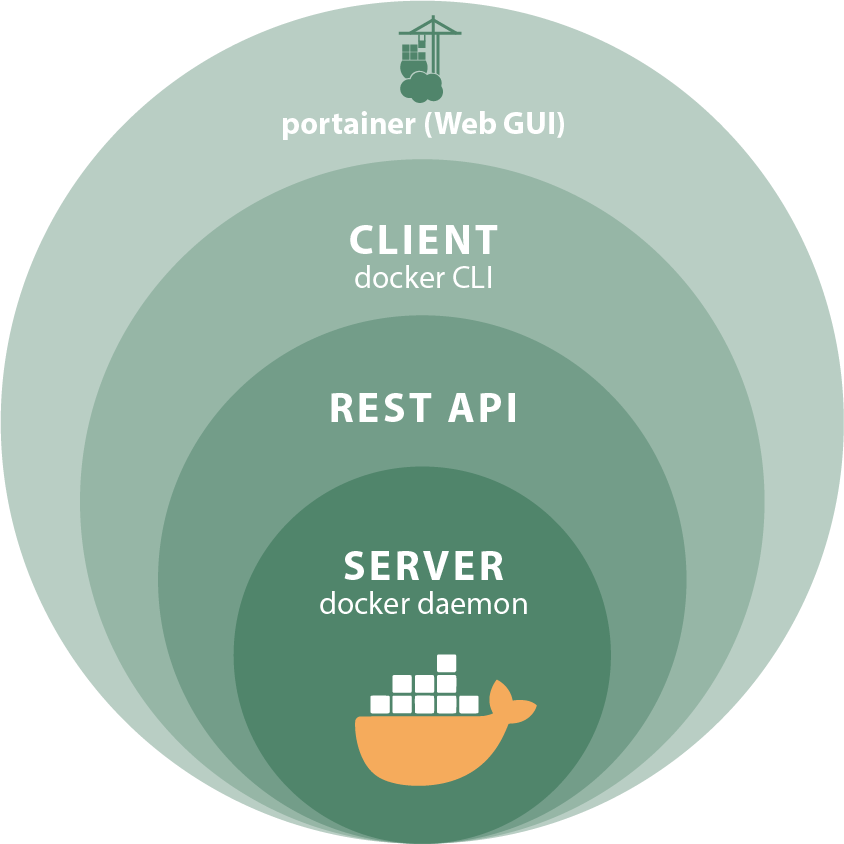

Let’s first take a look at the Docker Engine and its components so we have a basic idea of how the system works. With the Docker Engine, you can develop, assemble, deliver and run applications using the following components:

3.4.1 Docker Daemon

A persistent background process that manages Docker images, containers, networks and storage volumes. The Docker daemon constantly listens for Docker API requests and processes them.

3.4.2 Docker Engine REST API

An API used by applications to interact with the Docker daemon; it can be accessed via an HTTP client.
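As a minimal sketch of such an HTTP client, the following code queries the daemon's `/version` endpoint using only the Python standard library. It assumes a Linux host where the daemon listens on the Unix socket `/var/run/docker.sock` (the usual default, but configurable); the class and function names are invented for this example. If no daemon is reachable, the helper returns `None` instead of raising.

```python
import http.client
import json
import socket

# Assumption: default daemon socket on a Linux host.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path, socket_path=DOCKER_SOCKET):
    """Send a GET request to the daemon and return (status, decoded JSON body)."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()


def try_docker_version(socket_path=DOCKER_SOCKET):
    """Return the engine version string, or None if the daemon is not reachable."""
    try:
        status, body = docker_get("/version", socket_path)
    except (OSError, http.client.HTTPException, ValueError):
        return None
    if status == 200 and isinstance(body, dict):
        return body.get("Version")
    return None


print(try_docker_version())
```

The Docker client described in the next section talks to the same API; the sketch only makes the underlying HTTP request visible.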

3.4.3 Docker Client / Docker CLI

The Docker client allows users to interact with Docker. It can run on the same host as the daemon or connect to a daemon on a remote host, and it can communicate with more than one daemon. The Docker client provides a command line interface (CLI) through which you can issue commands to a Docker daemon to create, run and stop applications.

The main purpose of the Docker Client is to provide a means to pull images from a registry and run them on a Docker host. Common commands issued by a client are:

- docker build

- docker pull

- docker run
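The three commands above can be assembled from a script, for example. The sketch below only builds and prints the command lines by default (a dry run), so it works without a Docker installation. The image tag `my-app:1.0`, the container name `web` and the port mapping are placeholders; the helper functions are invented for this illustration.

```python
import shlex
import shutil
import subprocess


def build_cmd(tag, context="."):
    """docker build: create an image from the Dockerfile in the given directory."""
    return ["docker", "build", "-t", tag, context]


def pull_cmd(image):
    """docker pull: download an image from a registry."""
    return ["docker", "pull", image]


def run_cmd(image, name, ports=None, detach=True):
    """docker run: start a container from an image."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run in the background
    cmd += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]  # publish a port
    cmd.append(image)
    return cmd


def execute(cmd, dry_run=True):
    """Print the command; run it only if requested and the docker CLI exists."""
    print(shlex.join(cmd))
    if dry_run or shutil.which("docker") is None:
        return None
    return subprocess.run(cmd, check=True)


# Placeholder names, for illustration only.
for command in (
    build_cmd("my-app:1.0"),
    pull_cmd("nginx:latest"),
    run_cmd("nginx:latest", "web", {8080: 80}),
):
    execute(command)
```

Calling `execute(command, dry_run=False)` would pass the same argument list to the Docker client, provided the `docker` CLI is installed.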

3.4.4 Portainer

To simplify working with Docker commands, Portainer, a free, intuitive and easy-to-deploy GUI (Graphical User Interface) that is not part of the Docker Engine itself, is often the first choice. Portainer makes it possible to administer Docker Engines and manage them completely, even for small Docker projects. Hardware information such as the number of processors and the size of the RAM, as well as Docker-specific information (number of containers, images, volumes and networks), is visible at a glance. With its clear web interface, Portainer can thus easily take over the standard Docker functions and administration.

3.5 Docker Compose

3.6 Kubernetes

Kubernetes is an open source system that automates the deployment and management of containers and therefore works hand-in-hand with Docker. The configuration data can be passed to Kubernetes in a Helm chart, which specifies which containers are to be rolled out, monitored and managed. Kubernetes handles this task even with a large number of containers.

In addition, Kubernetes can make efficient use of resources. Machines that are not needed can either be switched off or reassigned to other tasks to save costs and capacity.

The OPC Router also supports this combination of Kubernetes and Docker: it is compatible with Kubernetes and provides corresponding Helm charts. An example is the OPC Router Docker Sample, which processes data from an OPC UA server, transfers it to an MS SQL server and visualizes this data in a Grafana dashboard (OPC Router Docker Sample at GitHub).

3.7 Docker Swarm

Docker Swarm is a technology used by IT specialists to manage and orchestrate containers in a network. It helps make applications scalable and robust. Docker Swarm coordinates how containers run in a cluster. A cluster is a group of computers working together. With Docker Swarm, you can easily add or remove containers to adjust to computing needs. If a container fails, Docker Swarm automatically starts a new one to ensure the application keeps running. Docker Swarm provides simple commands to start, stop, and monitor containers.
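The behaviour described here, keeping a desired number of containers running and replacing failed ones, can be modelled as a simple reconciliation loop. The following sketch is a toy model under that assumption and not Docker Swarm's actual implementation; `ToyService` and the task names are invented for illustration.

```python
import itertools


class ToyService:
    """Toy model of a service that keeps a desired number of tasks running."""

    def __init__(self, name, replicas):
        self.name = name
        self.desired = replicas
        self.running = []
        self._counter = itertools.count(1)
        self.reconcile()

    def reconcile(self):
        """Start or stop tasks until the running count matches the desired count."""
        while len(self.running) < self.desired:
            self.running.append(f"{self.name}.{next(self._counter)}")
        while len(self.running) > self.desired:
            self.running.pop()

    def scale(self, replicas):
        """Change the desired number of tasks and apply it."""
        self.desired = replicas
        self.reconcile()

    def fail(self, task):
        """Simulate a crashed task, then let the service replace it."""
        self.running.remove(task)
        self.reconcile()


service = ToyService("web", 3)
print(service.running)

service.fail("web.2")   # the failed task is replaced by a new one
print(service.running)

service.scale(1)        # scaling down removes surplus tasks
print(service.running)
```

The operator only states the desired number of replicas; starting replacements and removing surplus containers follows from comparing the desired state with the actual state.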

There are many reasons to use Docker Swarm. It saves time and resources by automating the management of containers. It supports various types of applications and workloads and offers built-in security features like encryption and certificates.

4. On which operating systems does Docker run?

A Docker container runs with the Docker Engine directly under Linux and on any host computer that supports the container runtime environment “Docker Desktop” (Mac and Windows). You do not have to adapt the applications to the host operating system. This allows both the application environment and the underlying operating system environment to be kept clean and minimal. Container-based applications can thus be moved easily between cloud environments, developer laptops and servers, provided the target system supports Docker and all third-party tools used with it.

4.1 How to install Docker?

The current versions of Docker Desktop, including installation instructions, can be found here:

- Installation instructions for Docker Desktop (Windows)

- Installation instructions for Docker Desktop (Mac)

Information on installing the Docker Engine on various Linux distributions, along with the current versions, is available in the official Docker documentation.

4.2 Docker Sample on GitHub

Deploying the OPC Router in a Docker container has some advantages. To help you get started, we provide a Docker sample on GitHub that contains a very simple and practical example that you can use to create your first project in a Docker container.

5. What is the microservices model?

Most business applications consist of several separate components organised in a so-called “stack”, for example a web server, a database and an in-memory cache. Containers allow you to assemble these individual components into a functional unit with easily replaceable parts. Different containers can then contain these components. This allows each component to be maintained, updated and modified independently of the others. Basically, this is the microservices model of application design.
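A small sketch shows what "independently replaceable" means in practice. The stack is modelled as a mapping from component to container image; the image tags are illustrative, and `replace_component` is a helper invented for this example.

```python
def replace_component(stack, component, new_image):
    """Return a copy of the stack in which exactly one component uses a new image."""
    if component not in stack:
        raise KeyError(f"unknown component: {component}")
    updated = dict(stack)
    updated[component] = new_image
    return updated


# One container image per component of the stack (illustrative tags).
stack = {
    "web": "nginx:1.25",
    "database": "postgres:16",
    "cache": "redis:7.0",
}

# Update only the cache; web server and database stay untouched.
upgraded = replace_component(stack, "cache", "redis:7.2")
changed = {name for name in stack if stack[name] != upgraded[name]}

print(changed)
```

Because each component lives in its own container, an update affects only the image of that one component.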

By dividing application functionality into separate and self-contained services, the model offers an alternative to slow traditional development processes and inflexible apps. Lightweight transferable containers make it easier to create and maintain microservices-based applications.

6. Summary

Now that we have learned about the different components of the Docker architecture and how they work together, we can understand the growing popularity of Docker containers and microservices. Docker helps to simplify the management of an infrastructure by making the underlying instances lighter, faster and more robust. In addition, Docker separates the application layer from the infrastructure layer, bringing much-needed portability, collaboration and control to the software delivery chain. Docker is designed for modern DevOps teams, and understanding its architecture can help you get the most out of your containerised applications.

Manage applications and services with Docker

The open source tool Docker makes it possible to connect a machine to IT on Linux-, Mac- and Windows-based systems. Docker containers do not carry a full operating system of their own and therefore require less computing power than a virtual machine, for example.

With software like OPC Router, deploying a container on an edge device is easy. With just a few clicks, the software is installed on the edge device via a Docker container and ready for use. This allows many different use cases to be realized. For example, machine data can be easily transferred to the cloud, to ERP systems such as SAP or to databases. Other systems can also be connected, for example via web services or REST. Connecting an industrial printer, such as Zebra, Domino, VideoJet, Wolke and many more, via an edge device is also possible without any problems. The great variety, flexibility and efficiency of Docker containers make them very popular in practice.
